The GitHub Sampler

GitHub is the world's largest developer platform, making it a compelling place to build developer tools and study software development. However, with over half a billion repositories and an API that limits responses to a thousand results, it feels nigh impossible to answer even simple questions like, "How often is a Python library used (in public GitHub projects)?"

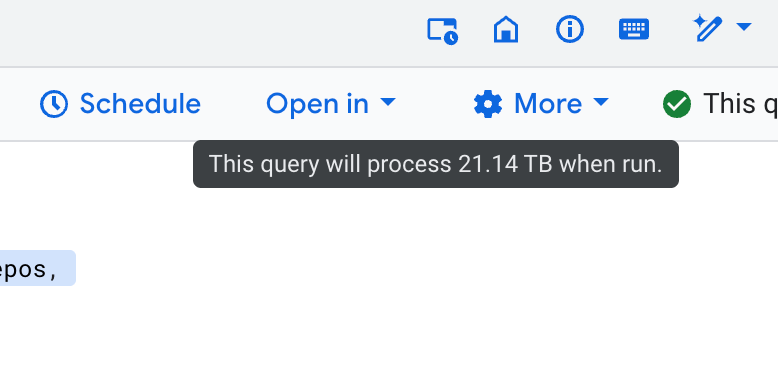

Playing it fast and loose by taking a census of the GHArchive tables on BigQuery is not an option. (A full scan of roughly 20 TB would cost about $125 at $6.25/TB.) We could `wget` GHArchive instead, but that means downloading tens of terabytes of data.

Necessity, as they say, is the mother of invention. Before we had the computational resources to study big data using big data, we relied on sampling and the Central Limit Theorem. The question is, how do we sample random repositories? The answer is surprisingly simple. Randomly sample days since the beginning of the archive, download the events for those days, deduplicate, filter to `CreateEvents`, and then take a weighted random sample with weights proportional to the number of `CreateEvents`. (You could do the same on BigQuery.) (You can also sample from the commits table if your estimand is active GitHub repositories.)
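The steps above can be sketched in a few functions. This is a minimal sketch under stated assumptions, not the package's implementation: the function names are hypothetical, the archive start date and the hourly URL pattern are what GHArchive documents as far as I know, and the `type` and `repo.name` fields assume the event format GHArchive has used since 2015. The weighting is one reading of "weights proportional to the number of CreateEvents": pick a sampled day with probability proportional to its count, then pick a repository uniformly within that day.

```python
import json
import random
from datetime import date, timedelta

ARCHIVE_START = date(2011, 2, 12)  # assumption: first day covered by GHArchive


def sample_days(start, end, k, rng):
    """Draw k distinct days uniformly at random from [start, end]."""
    n_days = (end - start).days + 1
    offsets = rng.sample(range(n_days), k)
    return sorted(start + timedelta(days=o) for o in offsets)


def hourly_urls(day):
    """Assumed GHArchive layout: one gzipped JSON-lines file per hour (0-23)."""
    return [
        f"https://data.gharchive.org/{day.isoformat()}-{hour}.json.gz"
        for hour in range(24)
    ]


def created_repos(json_lines):
    """Keep CreateEvents and deduplicate by repository name."""
    seen = set()
    for line in json_lines:
        event = json.loads(line)
        if event.get("type") == "CreateEvent":
            seen.add(event["repo"]["name"])
    return sorted(seen)


def weighted_sample(repos_by_day, n, rng):
    """Pick a day with probability proportional to its repo count,
    then pick one repository uniformly within that day (with replacement)."""
    days = [d for d, repos in repos_by_day.items() if repos]
    weights = [len(repos_by_day[d]) for d in days]
    picked_days = rng.choices(days, weights=weights, k=n)
    return [rng.choice(repos_by_day[d]) for d in picked_days]
```

To use it, download and decompress the files from `hourly_urls(day)` for each sampled day, pass their lines to `created_repos`, collect the results in a dict keyed by day, and hand that dict to `weighted_sample`.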

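The other option is to exploit the fact that GitHub identifies repositories on the backend with an auto-incrementing integer ID. Draw IDs uniformly at random up to the largest known ID and keep the ones that resolve to public repositories.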
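A minimal sketch of ID-based sampling, assuming GitHub's REST endpoint for listing public repositories (`GET /repositories?since=ID`, which returns repositories with an ID greater than `since`). The function names, the `max_id` upper bound, and the `max_tries` cap are hypothetical and must be supplied by you. The sketch keeps a draw only when the drawn ID itself is a public repository. Taking "the first repository after a random ID" instead would over-sample repositories that follow long gaps of private or deleted IDs.

```python
import json
import random
import urllib.request


def fetch_page(since_id, token=None):
    """Return one page of public repositories with an ID greater than since_id."""
    headers = {"Accept": "application/vnd.github+json"}
    if token:
        headers["Authorization"] = f"Bearer {token}"
    url = f"https://api.github.com/repositories?since={since_id}"
    request = urllib.request.Request(url, headers=headers)
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def sample_by_id(max_id, n, rng, fetch=fetch_page, max_tries=10_000):
    """Rejection-sample up to n public repositories.

    Draw IDs uniformly from [1, max_id] and accept a draw only if the
    first repository listed after (ID - 1) has exactly that ID.
    """
    sample = []
    for _ in range(max_tries):
        if len(sample) == n:
            break
        candidate = rng.randint(1, max_id)
        page = fetch(candidate - 1)
        if page and page[0]["id"] == candidate:
            sample.append(page[0])
    return sample
```

Each draw costs one API request, so an authenticated token and a modest `n` are advisable given rate limits. The `fetch` parameter is injectable so the sampling logic can be exercised without touching the network.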

Here's a Python package that implements the methods discussed here, and a GitHub workflow that iteratively improves the precision of the estimate of "How often is a Python library used (in public GitHub projects)?"
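To see why adding samples improves precision, here is a minimal sketch of the normal-approximation (Central Limit Theorem) interval for a proportion. It is not taken from the package or the workflow; `proportion_ci` is a hypothetical helper, and it assumes a simple random sample of repositories in which `hits` of `n` use the library.

```python
import math


def proportion_ci(hits, n, z=1.96):
    """Point estimate and approximate 95% interval for a proportion.

    Uses the normal approximation, so it is only reasonable when both
    hits and n - hits are not too small.
    """
    p = hits / n
    standard_error = math.sqrt(p * (1 - p) / n)
    lower = max(0.0, p - z * standard_error)
    upper = min(1.0, p + z * standard_error)
    return p, lower, upper
```

The standard error shrinks with the square root of `n`, so quadrupling the sample roughly halves the width of the interval.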

P.S. See also https://github.com/github/innovationgraph